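With the basic approach worked out in the previous section, we can start writing code.

The main thing to watch for, and the biggest headache in writing any crawler, is anti-scraping measures. Lagou's countermeasure is to ban the offending IP outright, so the first thing we need is a proxy IP pool that we maintain ourselves.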

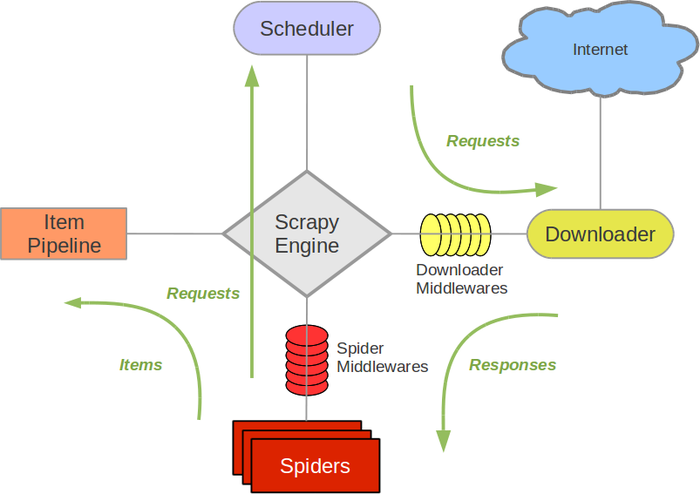

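Basic Scrapy architecture

The core crawling logic goes in Spiders, the data definition and storage code go in Item and Pipeline respectively, and finally we replace the HttpProxyMiddleware component with our own.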

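IP proxies

Plenty of vendors sell proxy IPs, but they are expensive and do not work especially well, so I wrote my own package that scrapes free proxies. The code is on GitHub, and the README explains how to use it.

With proxies in hand, let's start writing code.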

Create a project named lagou_job (the package name that settings.py refers to):

scrapy startproject lagou_job

items.py

First, pin down the data structure by defining the item:

import scrapy


class LagouJobInfo(scrapy.Item):
    """One job posting; apart from keyword, each field mirrors a key in Lagou's JSON result."""
    keyword = scrapy.Field()  # the search keyword that produced this result
    companyLogo = scrapy.Field()
    salary = scrapy.Field()
    city = scrapy.Field()
    financeStage = scrapy.Field()
    industryField = scrapy.Field()
    approve = scrapy.Field()
    positionAdvantage = scrapy.Field()
    positionId = scrapy.Field()
    companyLabelList = scrapy.Field()
    score = scrapy.Field()
    companySize = scrapy.Field()
    adWord = scrapy.Field()
    createTime = scrapy.Field()
    companyId = scrapy.Field()
    positionName = scrapy.Field()
    workYear = scrapy.Field()
    education = scrapy.Field()
    jobNature = scrapy.Field()
    companyShortName = scrapy.Field()
    district = scrapy.Field()
    businessZones = scrapy.Field()
    imState = scrapy.Field()
    lastLogin = scrapy.Field()
    publisherId = scrapy.Field()
    # explain = scrapy.Field()
    plus = scrapy.Field()
    pcShow = scrapy.Field()
    appShow = scrapy.Field()
    deliver = scrapy.Field()
    gradeDescription = scrapy.Field()
    companyFullName = scrapy.Field()
    formatCreateTime = scrapy.Field()

Spiders.py

After importing the required packages, define a class that inherits from Spider:

# -*- coding: utf-8 -*-
# Written for Python 2; scrapy.log was deprecated in Scrapy 1.0 in favor of the standard logging module.
import itertools
import json
import urllib

import MySQLdb
import requests
import scrapy
from scrapy import Spider
from scrapy import log

from ..items import LagouJobInfo


class Lagou_job_info(Spider):
    """Crawl Lagou job listings for every (city, job keyword) pair."""
    name = 'lagou_job_info'

    def __init__(self):
        super(Lagou_job_info, self).__init__()
        # City names
        self.citynames = self.get_citynames()
        # Job keywords
        self.job_names = self.get_job_names()
        # Every (city, job keyword) combination
        self.url_params = list(itertools.product(self.citynames, self.job_names))
        # %s is filled with the URL-quoted city name
        self.url = 'http://www.lagou.com/jobs/positionAjax.json?px=default&city=%s&needAddtionalResult=false'
        self.headers = {
            'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
            'Accept-Encoding': 'gzip, deflate',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36'
        }

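As the code above shows, the rough flow is: load the job keywords and city names from the database, then pair them up with itertools.product so that start_requests can consume them.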
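To make the pairing step concrete, here is a minimal sketch of what itertools.product yields. The two lists are made-up sample values standing in for the database results, and the snippet is written for Python 3 rather than the Python 2 of the listing.

```python
import itertools

# Hypothetical sample data; the real spider loads both lists from the database.
citynames = ['北京', '上海']
job_names = ['Python', 'Java', 'Go']

# product() yields every (city, keyword) pair, with the last input varying fastest.
url_params = list(itertools.product(citynames, job_names))

for city, kd in url_params:
    print(city, kd)
```

Two cities and three keywords give six starting requests. The count is the product of the two list sizes, so it grows quickly as either list gets longer.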

    def start_requests(self):
        '''Build a URL from each entry in self.url_params and yield the page-1 request.'''
        for city, kd in self.url_params:
            # The city goes in the query string; page number and keyword go in the POST form
            url = self.url % urllib.quote(city)
            yield scrapy.FormRequest(url=url, formdata={'pn': '1', 'kd': kd},
                                     method='POST', headers=self.headers,
                                     meta={'page': 1, 'kd': kd}, dont_filter=True)
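As a rough illustration of what each of these requests carries, the sketch below builds the URL and the form-encoded body with the standard library only. FormRequest does the form encoding itself, so `build_request_parts` is a hypothetical helper for inspection, not part of the spider, and the code targets Python 3 (where `quote` lives in `urllib.parse`).

```python
from urllib.parse import quote, urlencode

# URL template copied from the spider; %s is replaced by the percent-encoded city.
URL_TEMPLATE = ('http://www.lagou.com/jobs/positionAjax.json'
                '?px=default&city=%s&needAddtionalResult=false')


def build_request_parts(city, keyword, page=1):
    """Return (url, body) for one results page (hypothetical helper)."""
    url = URL_TEMPLATE % quote(city)  # non-ASCII city names become %XX escapes
    body = urlencode({'pn': str(page), 'kd': keyword})  # page number and keyword
    return url, body


url, body = build_request_parts('北京', 'Python')
print(url)
print(body)
```

Keeping the city in the query string and the page number in the form body is what lets the spider reuse the same URL for every page of a given city.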

start_requests builds each URL and yields a Request object; the parse function then handles the Response that comes back.

    def parse(self, response):
        '''Parse one page of results, then request the next page.'''
        page = int(response.meta.get('page'))
        kd = response.meta.get('kd')
        try:
            html = json.loads(response.body)
        except ValueError:
            # A Safedog (WAF) block page may come back instead of JSON
            log.msg(response.body, level=log.ERROR)
            log.msg(response.status, level=log.ERROR)
            # Re-issue the current request, then stop: there is nothing to parse
            yield scrapy.FormRequest(response.url, formdata={'pn': str(page), 'kd': kd},
                                     headers=self.headers,
                                     meta={'page': page, 'kd': kd}, dont_filter=True)
            return
        position_result = html.get('content').get('positionResult')
        # Only continue if the current page has results
        if position_result.get('resultSize') != 0:
            for result in position_result.get('result'):
                item = LagouJobInfo()
                item['keyword'] = kd
                item['companyLogo'] = result.get('companyLogo')
                item['salary'] = result.get('salary')
                item['city'] = result.get('city')
                item['financeStage'] = result.get('financeStage')
                item['industryField'] = result.get('industryField')
                item['approve'] = result.get('approve')
                item['positionAdvantage'] = result.get('positionAdvantage')
                item['positionId'] = result.get('positionId')
                # Flatten the label list into a comma-separated string
                labels = result.get('companyLabelList')
                item['companyLabelList'] = ','.join(labels) if isinstance(labels, list) else ''
                item['score'] = result.get('score')
                item['companySize'] = result.get('companySize')
                item['adWord'] = result.get('adWord')
                item['createTime'] = result.get('createTime')
                item['companyId'] = result.get('companyId')
                item['positionName'] = result.get('positionName')
                item['workYear'] = result.get('workYear')
                item['education'] = result.get('education')
                item['jobNature'] = result.get('jobNature')
                item['companyShortName'] = result.get('companyShortName')
                item['district'] = result.get('district')
                item['businessZones'] = result.get('businessZones')
                item['imState'] = result.get('imState')
                item['lastLogin'] = result.get('lastLogin')
                item['publisherId'] = result.get('publisherId')
                # item['explain'] = result.get('explain')
                item['plus'] = result.get('plus')
                item['pcShow'] = result.get('pcShow')
                item['appShow'] = result.get('appShow')
                item['deliver'] = result.get('deliver')
                item['gradeDescription'] = result.get('gradeDescription')
                item['companyFullName'] = result.get('companyFullName')
                item['formatCreateTime'] = result.get('formatCreateTime')
                yield item
            # Current page done: request the next page
            yield scrapy.FormRequest(response.url, formdata={'pn': str(page + 1), 'kd': kd},
                                     headers=self.headers,
                                     meta={'page': page + 1, 'kd': kd}, dont_filter=True)

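Note that pagination relies on the meta argument, which carries values (here the page number and keyword) from a request to the callback that handles its response; see the Scrapy documentation for details.

That completes the core spider code.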
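The page number and keyword carried in meta are all that parse needs to decide what to request next. The sketch below isolates that decision in a plain function so it can be tried without Scrapy; `next_form` is a hypothetical helper, and the sample payloads are invented to match the keys the spider reads (`content`, `positionResult`, `resultSize`).

```python
import json


def next_form(body, page, kd):
    """Return the form data to POST next, or None when the keyword is exhausted.

    Hypothetical helper that mirrors the branching in parse() without Scrapy.
    """
    try:
        data = json.loads(body)
    except ValueError:
        # Not JSON (for example an anti-bot page): retry the same page.
        return {'pn': str(page), 'kd': kd}
    position_result = data.get('content', {}).get('positionResult', {})
    if position_result.get('resultSize', 0) != 0:
        # Results present: move on to the next page.
        return {'pn': str(page + 1), 'kd': kd}
    return None  # empty page: stop paginating


# Invented payloads shaped like the response the spider expects.
page_with_results = json.dumps({'content': {'positionResult': {'resultSize': 15, 'result': []}}})
empty_page = json.dumps({'content': {'positionResult': {'resultSize': 0, 'result': []}}})

print(next_form(page_with_results, 1, 'Python'))
print(next_form(empty_page, 30, 'Python'))
print(next_form('<html>blocked</html>', 3, 'Python'))
```

Stopping on the first empty page is what ends the chain of requests for a given city and keyword; a blocked response keeps the same page number so that no page is skipped.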

pipelines.py

Items returned by the spider need to be saved to the database, which is done by defining an item pipeline.

import MySQLdb


class LagouJobInfoDbPipeline(object):
    '''Save each item to the database.'''

    def process_item(self, item, spider):
        conn = MySQLdb.connect(host='localhost', user='root', passwd='qwer', charset='utf8', db='lagou')
        cur = conn.cursor()
        sql = 'insert into job_info(keyword, companyLogo, salary, city, financeStage, industryField, approve, positionAdvantage, positionId, companyLabelList, score, companySize, adWord, createTime, companyId, positionName, workYear, education, jobNature, companyShortName, district, businessZones, imState, lastLogin, publisherId, plus, pcShow, appShow, deliver, gradeDescription, companyFullName, formatCreateTime) values(%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s, %s, %s,%s,%s,%s,%s,%s)'
        # Column order; must match the column list in the SQL above
        key = ['keyword', 'companyLogo', 'salary', 'city', 'financeStage', 'industryField', 'approve', 'positionAdvantage', 'positionId', 'companyLabelList', 'score', 'companySize', 'adWord', 'createTime', 'companyId', 'positionName', 'workYear', 'education', 'jobNature', 'companyShortName', 'district', 'businessZones', 'imState', 'lastLogin', 'publisherId', 'plus', 'pcShow', 'appShow', 'deliver', 'gradeDescription', 'companyFullName', 'formatCreateTime']
        # Convert every value to a byte string (Python 2): encode unicode, str() anything else
        for i in item.keys():
            try:
                item[i] = str(item.get(i).encode('utf-8'))
            except Exception:
                item[i] = str(item.get(i))
        values = [item.get(x) for x in key]
        try:
            cur.execute(sql, values)
            # log.msg('insert success %s' % item.get('keyword').encode('utf-8') + item.get('city').encode('utf-8') + item.get('positionName').encode('utf-8'), level=log.INFO)
        except MySQLdb.IntegrityError:
            # The row violates a constraint (e.g. a duplicate key): skip it
            # log.msg('insert fail %s' % item.get('keyword').encode('utf-8') + item.get('city').encode('utf-8') + item.get('positionName').encode('utf-8'), level=log.WARNING)
            pass
        conn.commit()
        cur.close()
        conn.close()
        return item
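The insert logic can be tried without a MySQL server. The sketch below swaps in the standard library's sqlite3 purely as a stand-in, so the placeholder is `?` rather than MySQLdb's `%s`. The five-column table, the choice of positionId as primary key, and the helpers `make_insert_sql` and `save_item` are all assumptions made for illustration. It also generates the placeholder list from the column list instead of typing 32 of them by hand.

```python
import sqlite3

# A shortened column list for illustration; the real table has 32 columns.
KEYS = ['keyword', 'positionId', 'positionName', 'salary', 'city']


def make_insert_sql(table, keys, placeholder='?'):
    """Build a parameterized INSERT with one placeholder per column."""
    columns = ', '.join(keys)
    marks = ', '.join([placeholder] * len(keys))
    return 'insert into %s(%s) values(%s)' % (table, columns, marks)


def save_item(conn, item):
    """Insert one item; return False if a constraint (here the primary key) rejects it."""
    sql = make_insert_sql('job_info', KEYS)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, [item.get(k) for k in KEYS])
        return True
    except sqlite3.IntegrityError:
        return False


conn = sqlite3.connect(':memory:')
# Assumed schema: positionId as primary key, so a repeated posting is rejected.
conn.execute('create table job_info(keyword text, positionId integer primary key, '
             'positionName text, salary text, city text)')

item = {'keyword': 'Python', 'positionId': 1001, 'positionName': 'Backend Engineer',
        'salary': '15k-25k', 'city': '北京'}
first = save_item(conn, item)
second = save_item(conn, item)  # same positionId again
row_count = conn.execute('select count(*) from job_info').fetchone()[0]
conn.close()

print(first, second, row_count)
```

Passing `'%s'` as the placeholder yields SQL in the style MySQLdb expects. Deriving the placeholders from the key list keeps the column count and the value count from drifting apart.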

middlewares.py

Define a downloader middleware that takes care of the proxy handling.

import random
import time

from scrapy import log


class ProxyMiddleWare(object):
    """Attach a random proxy to each request; retry through a new proxy on non-200 responses."""

    def process_request(self, request, spider):
        '''Attach a proxy to the request.'''
        proxy = self.get_random_proxy()
        request.meta['proxy'] = 'http://%s' % proxy
        # print 'use proxy'
        log.msg('-'*10, level=log.DEBUG)
        log.msg(request.body.encode('utf-8'), level=log.DEBUG)
        log.msg(proxy, level=log.DEBUG)
        log.msg('-'*10, level=log.DEBUG)
        # print request.headers

    def process_response(self, request, response, spider):
        '''Handle the returned response.'''
        # If the status is not 200, re-issue the current request
        if response.status != 200:
            log.msg('-'*10, level=log.ERROR)
            log.msg(response.url, level=log.ERROR)
            log.msg(request.body.encode('utf-8'), level=log.ERROR)
            log.msg(response.status, level=log.ERROR)
            log.msg(request.meta['proxy'], level=log.ERROR)
            log.msg('proxy block!', level=log.ERROR)
            log.msg('-'*10, level=log.ERROR)
            proxy = self.get_random_proxy()
            # Attach a new proxy to the current request
            request.meta['proxy'] = 'http://%s' % proxy
            return request
        return response

    def get_random_proxy(self):
        '''Read the proxy file and return one proxy at random.'''
        while True:
            with open('proxies.txt', 'r') as f:
                proxies = f.readlines()
            if proxies:
                break
            else:
                # The file is empty: wait for it to be refilled
                time.sleep(1)
        proxy = random.choice(proxies).strip()
        return proxy
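The file-reading step can be exercised on its own. This is a minimal Python 3 sketch of the same idea with two changes that are my assumptions rather than the original behavior: blank lines are filtered out (a blank line in proxies.txt would otherwise be chosen as an empty proxy), and the wait is bounded by a hypothetical `retries` parameter instead of looping forever. The addresses come from the reserved documentation range and are not real proxies.

```python
import os
import random
import tempfile
import time


def load_proxies(path):
    """Return the non-empty, stripped lines of the proxy file."""
    with open(path, 'r') as f:
        return [line.strip() for line in f if line.strip()]


def get_random_proxy(path, retries=5, delay=1.0):
    """Pick a random proxy, waiting briefly while the file is empty.

    Unlike the loop in the middleware, this gives up after `retries` attempts.
    """
    for _ in range(retries):
        proxies = load_proxies(path)
        if proxies:
            return random.choice(proxies)
        time.sleep(delay)
    raise RuntimeError('no proxies available in %s' % path)


# Demo with a temporary file and made-up addresses (192.0.2.0/24 is reserved for docs).
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write('192.0.2.10:8080\n\n192.0.2.11:3128\n')
demo_path = tmp.name

loaded = load_proxies(demo_path)
chosen = get_random_proxy(demo_path)
print('http://%s' % chosen)
os.remove(demo_path)
```

Re-reading the file on every call, as the middleware does, means a separate process can refresh the proxy list while the crawl is running.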

settings.py

BOT_NAME = 'lagou_job'

SPIDER_MODULES = ['lagou_job.spiders']
NEWSPIDER_MODULE = 'lagou_job.spiders'

ROBOTSTXT_OBEY = False
DOWNLOAD_DELAY = 1

DOWNLOADER_MIDDLEWARES = {
    # None disables a built-in middleware; ours takes the proxy middleware's slot
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': None,
    'lagou_job.middlewares.ProxyMiddleWare': 750,
    'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': None,
}

ITEM_PIPELINES = {
    'lagou_job.pipelines.LagouJobInfoDbPipeline': 300,
}

LOG_LEVEL = 'DEBUG'
LOG_ENABLED = True

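Pay attention to the order in which the middlewares are placed. Why 750? That is the position the built-in HttpProxyMiddleware holds in Scrapy's default downloader middleware order, so our replacement takes over its slot. The downloader middleware documentation explains the ordering in detail and is well worth reading.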
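The effect of the order values can be modeled in a few lines. The sketch below is a simplified illustration, not Scrapy's actual code: it merges a base dict with a project dict, drops entries set to None, and sorts by value. The three base entries use their default order values with the module paths shortened; `effective_order` is a hypothetical helper.

```python
# Three entries from Scrapy's default downloader middleware order (shortened names).
BASE = {
    'CookiesMiddleware': 700,
    'HttpProxyMiddleware': 750,
    'DownloaderStats': 850,
}

# Project settings: disable the built-in proxy middleware, add ours at the same slot.
CUSTOM = {
    'HttpProxyMiddleware': None,
    'ProxyMiddleWare': 750,
}


def effective_order(base, custom):
    """Merge the two dicts, drop entries set to None, and sort by order value."""
    merged = dict(base)
    merged.update(custom)
    enabled = {name: order for name, order in merged.items() if order is not None}
    return sorted(enabled, key=enabled.get)


# process_request hooks run in increasing order, process_response hooks in decreasing order.
request_order = effective_order(BASE, CUSTOM)
response_order = list(reversed(request_order))
print(request_order)
print(response_order)
```

A lower number places a middleware closer to the engine and a higher number closer to the downloader, which is why the chosen value decides what the middleware sees on the way out and on the way back.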

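Finally, write a launcher script, run_lagou_job_info.py:

from scrapy import cmdline

# JOBDIR tells Scrapy where to persist crawl state so a stopped crawl can resume
cmd = 'scrapy crawl lagou_job_info -s JOBDIR=crawls/somespider-1'
cmdline.execute(cmd.split())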

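This records crawl progress as the spider runs, so the crawl can be stopped at any time and later resumed from where it left off.

Start the crawler

python run_lagou_job_info.py

All done!

Summary

Writing a crawler is not hard in itself. The hard part is getting around a site's anti-scraping strategy, and once you have found the right approach, even that is fairly simple.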